Ollama

Website: https://ollama.ai

Ollama enables you to run open-source large language models, such as Llama 3, on your local machine.

Getting Started

Follow these steps to get started:

Download the Client

First, download the Ollama client if you don't already have it. You can download it from the Ollama website.

Run Your Model

Open a terminal and run the following command:

`ollama run codellama`

This command downloads the model (if it isn't already present) and runs it. This step is necessary before you can use the model in the plugin.
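If the model does not show up in the plugin later, it can help to check which models Ollama actually has locally. The sketch below queries Ollama's REST API endpoint `GET /api/tags`, which lists downloaded models. It assumes Ollama is listening on its default address `http://localhost:11434`. The helper names `parse_model_names` and `list_local_models` are hypothetical and are not part of the plugin.

```python
import json
import urllib.request

# Assumption: the Ollama server runs locally on its default port.
OLLAMA_BASE_URL = "http://localhost:11434"


def parse_model_names(payload: dict) -> list[str]:
    """Extract model names from a /api/tags response body."""
    return [model["name"] for model in payload.get("models", [])]


def list_local_models(base_url: str = OLLAMA_BASE_URL) -> list[str]:
    """Ask the local Ollama server which models have been downloaded."""
    with urllib.request.urlopen(f"{base_url}/api/tags", timeout=5) as response:
        payload = json.load(response)
    return parse_model_names(payload)
```

After `ollama run codellama` has finished downloading, `list_local_models()` should return a list containing an entry such as `codellama:latest`. Parsing is kept separate from the network call so that it can be checked without a running server.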

Configure the Plugin

Next, connect Ollama with the plugin:

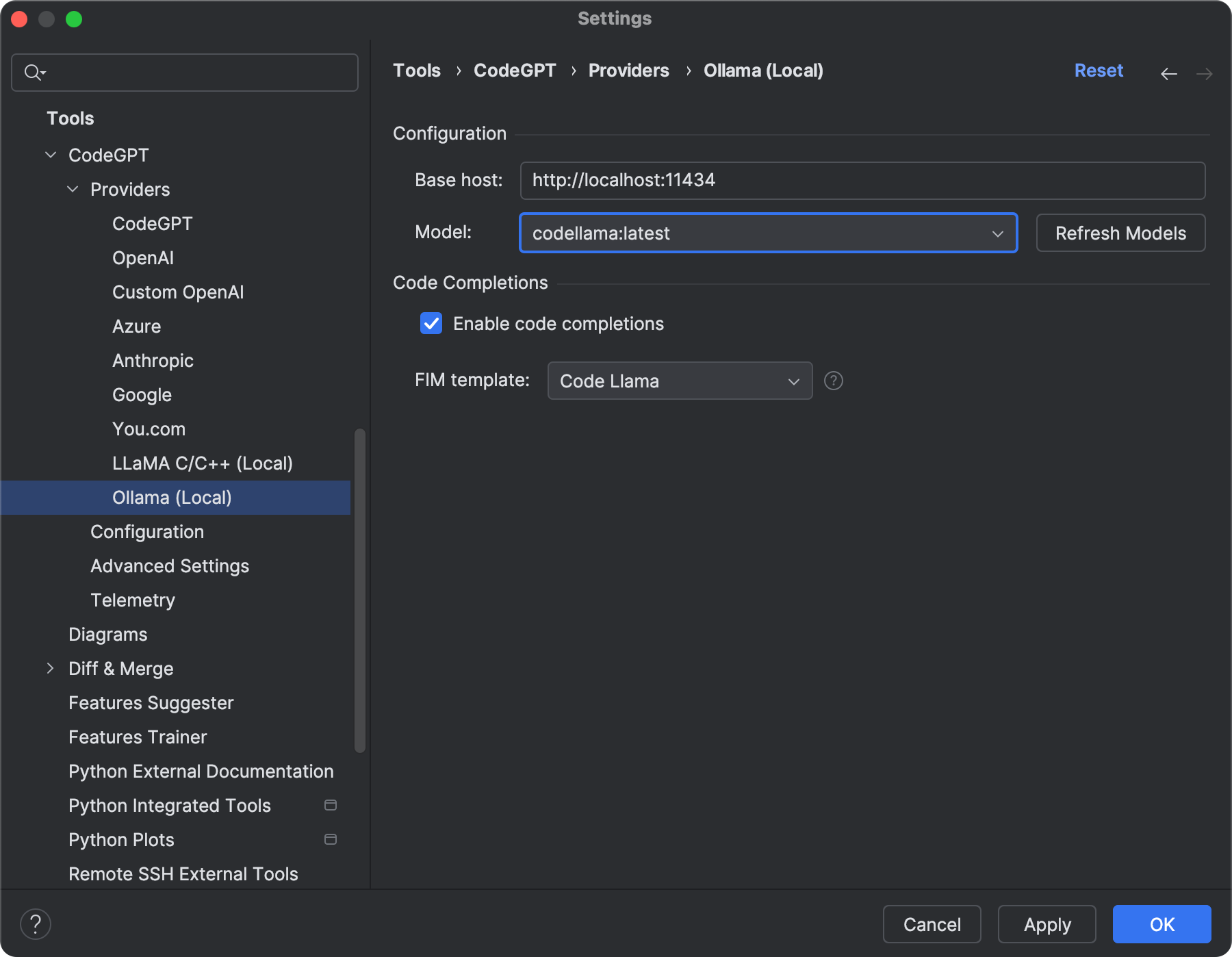

- Navigate to the plugin's settings via File > Settings/Preferences > Tools > ProxyAI > Providers > Ollama (Local).

- Click Refresh Models to sync all Ollama models with the plugin.

- Optionally, choose the appropriate FIM template for code completions. Before enabling code completions, make sure that the model supports fill-in-the-middle (FIM).

- Click Apply or OK to save your changes.
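A FIM template places the code before the cursor (the prefix) and the code after it (the suffix) around a model's special tokens, so the model generates the missing middle. The sketch below illustrates that idea with a template in the style of Code Llama's infill format (`<PRE>`, `<SUF>`, `<MID>`). The exact template the plugin applies may differ, and other model families, such as StarCoder or DeepSeek Coder, use different tokens. The function names and the example model tag `codellama:7b-code` are assumptions for illustration. The request body targets Ollama's `/api/generate` endpoint with `raw` set to true, which tells Ollama not to apply its own prompt template.

```python
import json

# Hypothetical template in the style of Code Llama's infill prompt format.
CODE_LLAMA_FIM_TEMPLATE = "<PRE> {prefix} <SUF>{suffix} <MID>"


def build_fim_prompt(prefix: str, suffix: str,
                     template: str = CODE_LLAMA_FIM_TEMPLATE) -> str:
    """Insert the code before and after the cursor into a FIM template."""
    # str.format only parses the template, so braces inside the code are safe.
    return template.format(prefix=prefix, suffix=suffix)


def build_generate_request(model: str, prefix: str, suffix: str) -> bytes:
    """Build a JSON body for Ollama's /api/generate with a manual FIM prompt."""
    body = {
        "model": model,
        "prompt": build_fim_prompt(prefix, suffix),
        "raw": True,      # skip Ollama's own prompt template
        "stream": False,  # return a single JSON response
    }
    return json.dumps(body).encode("utf-8")
```

For example, `build_fim_prompt("a", "b")` yields `<PRE> a <SUF>b <MID>`. A model without FIM training has not learned these tokens, which is why the model's FIM support matters before you enable code completions.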

Integration with DeepSeek R1

Follow this guide to set up DeepSeek R1 locally on a Mac. The guide covers installing ProxyAI, configuring Ollama, and using a local large language model for secure, AI-assisted coding without relying on public LLMs.